🚨 Results are evaluated on MathCanvas-Bench. To submit your model, please send its results to this email.

MathCanvas

While Large Language Models (LLMs) excel at textual reasoning, they struggle in mathematical domains such as geometry that intrinsically rely on visual aids. Existing approaches to Visual Chain-of-Thought (VCoT) are often limited by rigid external tools or fail to generate the high-fidelity, strategically timed diagrams that complex problem-solving requires.

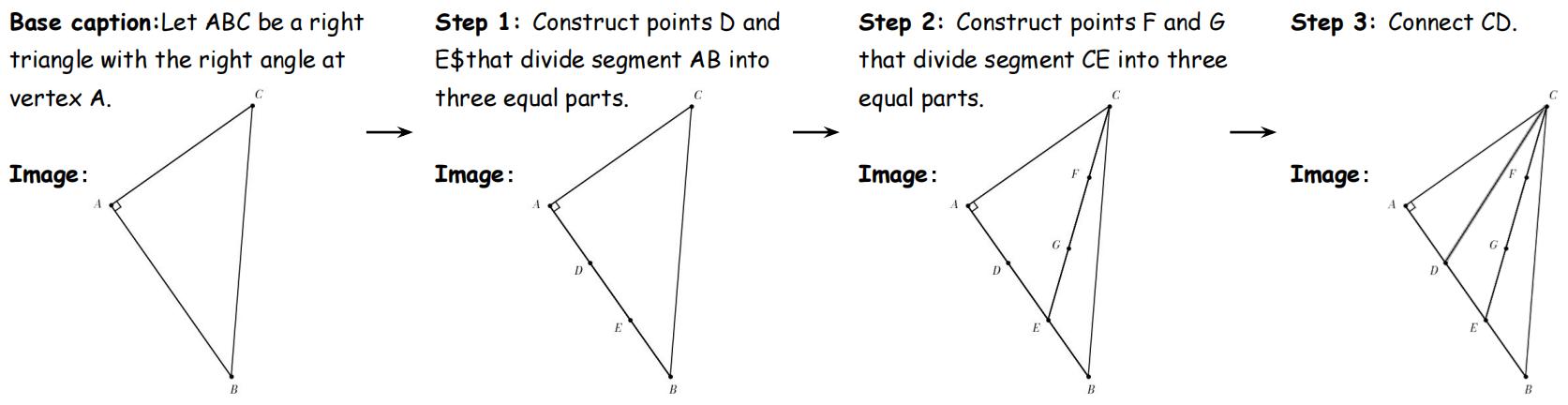

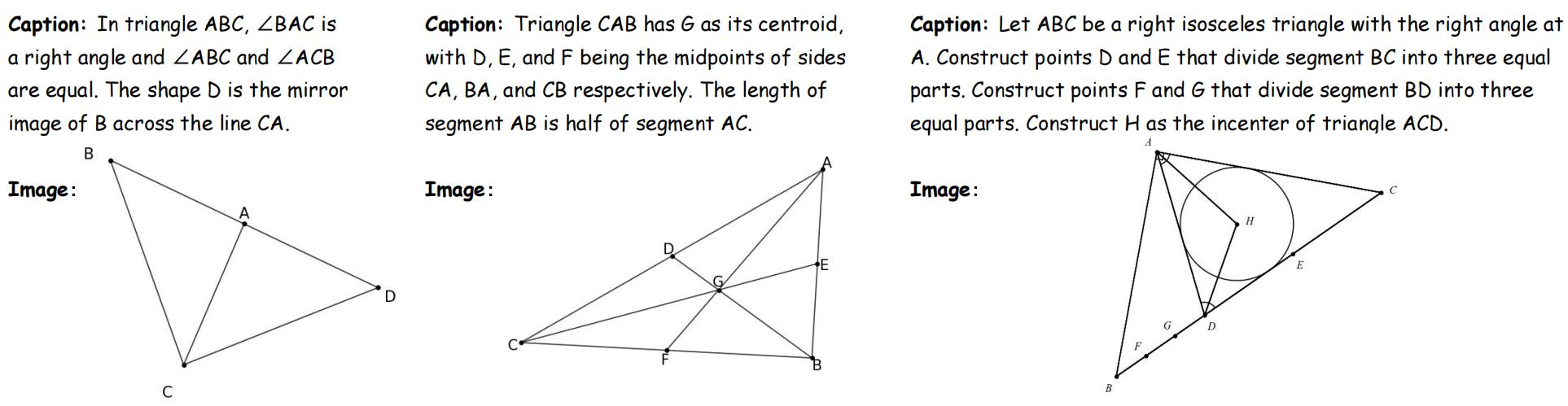

To bridge this gap, we introduce MathCanvas, a comprehensive framework designed to endow unified Large Multimodal Models (LMMs) with intrinsic VCoT capabilities for mathematics. Our approach consists of two phases. First, a Visual Manipulation stage pre-trains the model on a novel 15.2M-pair corpus, comprising 10M caption-to-diagram pairs (MathCanvas-Imagen) and 5.2M step-by-step editing trajectories (MathCanvas-Edit), so that it masters diagram generation and editing. Second, a Strategic Visual-Aided Reasoning stage fine-tunes the model on MathCanvas-Instruct, a new dataset of 219K interleaved visual-textual reasoning paths, teaching it when and how to use visual aids. To enable rigorous evaluation, we introduce MathCanvas-Bench, a challenging benchmark of 3K problems that require models to produce interleaved visual-textual solutions. Our model, BAGEL-Canvas, trained under this framework, achieves an 86% relative improvement over strong LMM baselines on MathCanvas-Bench and also generalizes well to other public math benchmarks. Our work provides a complete toolkit (framework, datasets, and benchmark) for unlocking complex, human-like visual-aided reasoning in LMMs.
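A relative improvement is the gain divided by the baseline score, (model - baseline) / baseline. The sketch below illustrates the arithmetic using the Weighted scores from the leaderboard (18.5 for BAGEL, 34.4 for BAGEL-Canvas). Treating the base BAGEL model as the baseline is an assumption made here for illustration; the paper defines which baselines the reported 86% refers to.

```python
def relative_improvement(baseline: float, model: float) -> float:
    """Return the relative improvement of `model` over `baseline` as a fraction."""
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    return (model - baseline) / baseline


# Weighted scores copied from the MathCanvas-Bench leaderboard.
# ASSUMPTION: base BAGEL is used as the baseline purely for illustration.
bagel_weighted = 18.5
bagel_canvas_weighted = 34.4

gain = relative_improvement(bagel_weighted, bagel_canvas_weighted)
print(f"{gain:.1%}")  # prints "85.9%"
```

Dividing by the baseline (rather than reporting the absolute 15.9-point gap) is what makes the figure "relative": the same absolute gain looks larger when the starting score is low.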

| # | Model | Source | Date | Type | Think | Overall (Complete) | Overall (Weighted) | Algebra | Analytic Geom. | Calc & Vector | Plane Geom. | Solid Geom. | Stats. | Transf. Geom. | Trig. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Gemini-2.5-Pro 🥇 | Link | 2025-10-17 | LMM | ✓ | 47.9 | 58.2 | 68.0 | 59.2 | 60.2 | 54.8 | 48.7 | 64.5 | 58.5 | 69.9 |
| 2 | Seed-1.6-Thinking 🥈 | Link | 2025-10-17 | LMM | ✓ | 44.1 | 55.2 | 67.7 | 57.5 | 55.9 | 52.2 | 45.0 | 65.1 | 56.8 | 60.7 |
| 3 | Qwen3-VL-Plus 🥉 | Link | 2025-10-17 | LMM | ✓ | 40.9 | 51.5 | 67.0 | 54.6 | 56.9 | 45.9 | 42.0 | 66.7 | 49.3 | 58.9 |
| 4 | GPT-5 | Link | 2025-10-17 | LMM | ✓ | 43.5 | 51.4 | 68.7 | 55.5 | 64.2 | 45.6 | 36.1 | 64.5 | 42.7 | 66.5 |
| 5 | Gemini-2.5-Flash | Link | 2025-10-17 | LMM | ✓ | 39.3 | 49.5 | 63.2 | 56.5 | 54.6 | 40.7 | 40.7 | 61.1 | 46.8 | 64.6 |
| 6 | GLM-4.5V | Link | 2025-10-17 | LMM | ✓ | 35.6 | 47.8 | 56.9 | 48.8 | 53.4 | 44.1 | 39.2 | 56.4 | 45.8 | 59.8 |
| 7 | Nano-Banana | Link | 2025-10-17 | ULMM | ✗ | 33.2 | 43.7 | 55.4 | 50.2 | 51.8 | 34.5 | 36.6 | 56.7 | 39.4 | 60.4 |
| 8 | Claude-Sonnet-4 | Link | 2025-10-17 | LMM | ✓ | 25.0 | 37.8 | 44.8 | 38.9 | 49.3 | 33.8 | 33.0 | 46.9 | 30.3 | 47.6 |
| 9 | BAGEL-Canvas | Link | 2025-10-17 | ULMM | ✗ | 21.9 | 34.4 | 29.9 | 27.2 | 17.9 | 40.0 | 35.3 | 23.2 | 29.3 | 40.4 |
| 10 | Qwen-2.5-VL-72B | Link | 2025-10-17 | LMM | ✗ | 21.1 | 32.8 | 30.6 | 19.5 | 36.4 | 34.5 | 33.5 | 23.9 | 33.6 | 48.9 |
| 11 | Gemini-2.0-Flash | Link | 2025-10-17 | LMM | ✗ | 21.2 | 32.6 | 39.1 | 32.6 | 38.9 | 31.1 | 25.6 | 51.4 | 28.1 | 38.0 |
| 12 | GPT-4.1 | Link | 2025-10-17 | LMM | ✗ | 19.0 | 30.0 | 40.4 | 30.7 | 37.1 | 24.1 | 25.1 | 54.0 | 21.5 | 42.5 |
| 13 | Qwen-2.5-VL-32B | Link | 2025-10-17 | LMM | ✗ | 15.4 | 27.6 | 29.8 | 27.4 | 27.8 | 27.4 | 27.2 | 27.9 | 20.1 | 30.5 |
| 14 | Keye-VL-1.5-8B | Link | 2025-10-17 | LMM | ✓ | 17.1 | 27.0 | 33.1 | 28.0 | 26.2 | 27.0 | 23.6 | 29.5 | 20.9 | 26.3 |
| 15 | Gemma-3-27b-it | Link | 2025-10-17 | LMM | ✗ | 15.8 | 26.6 | 31.3 | 28.4 | 34.4 | 25.8 | 21.0 | 40.0 | 21.0 | 26.9 |
| 16 | InternVL3.5-8B | Link | 2025-10-17 | LMM | ✗ | 16.7 | 26.4 | 32.3 | 33.8 | 33.8 | 24.2 | 26.9 | 43.7 | 16.2 | 14.9 |
| 17 | GPT-4.1-mini | Link | 2025-10-17 | LMM | ✗ | 14.6 | 26.3 | 35.7 | 30.5 | 36.5 | 22.0 | 22.4 | 24.8 | 19.7 | 30.3 |
| 18 | InternVL3.5-30B-A3B | Link | 2025-10-17 | LMM | ✗ | 11.7 | 22.2 | 22.2 | 19.9 | 15.1 | 24.9 | 24.3 | 22.1 | 17.4 | 18.4 |
| 19 | GPT-4o | Link | 2025-10-17 | LMM | ✗ | 9.9 | 19.4 | 21.6 | 17.7 | 21.8 | 19.5 | 18.6 | 17.4 | 13.2 | 23.0 |
| 20 | Qwen-2.5-VL-7B | Link | 2025-10-17 | LMM | ✗ | 8.9 | 18.7 | 19.5 | 19.0 | 19.2 | 20.6 | 18.7 | 10.7 | 13.9 | 15.0 |
| 21 | BAGEL | Link | 2025-10-17 | ULMM | ✗ | 8.3 | 18.5 | 18.1 | 13.1 | 17.1 | 20.8 | 23.0 | 10.9 | 19.4 | 13.3 |
| 22 | BAGEL-Zebra-CoT | Link | 2025-10-17 | ULMM | ✗ | 8.0 | 16.6 | 18.0 | 15.1 | 15.6 | 18.0 | 16.8 | 20.8 | 11.1 | 14.1 |
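The leaderboard reports two overall scores, Complete and Weighted. The sketch below is a simplified reading of the two columns under stated assumptions: Complete credits a problem only when every one of its sub-questions is answered correctly, while Weighted awards partial credit per sub-question. The `geometric_weights` scheme (later sub-questions count more) is hypothetical and is not necessarily the weighting MathCanvas-Bench uses; consult the paper for the exact definition.

```python
from typing import Callable, Sequence


def complete_score(correct: Sequence[bool]) -> float:
    """1.0 only if every sub-question of a problem is answered correctly."""
    return 1.0 if all(correct) else 0.0


def weighted_score(correct: Sequence[bool], weights: Sequence[float]) -> float:
    """Partial credit: total weight of correct sub-questions / total weight."""
    if len(correct) != len(weights):
        raise ValueError("need one weight per sub-question")
    total = sum(weights)
    return sum(w for ok, w in zip(correct, weights) if ok) / total


def benchmark_scores(
    problems: Sequence[Sequence[bool]],
    weight_fn: Callable[[int], Sequence[float]],
) -> tuple[float, float]:
    """Average both metrics over all problems, returned as percentages."""
    if not problems:
        raise ValueError("no problems to score")
    n = len(problems)
    complete = sum(complete_score(p) for p in problems) / n
    weighted = sum(weighted_score(p, weight_fn(len(p))) for p in problems) / n
    return 100 * complete, 100 * weighted


def geometric_weights(k: int, base: float = 2.0) -> list[float]:
    """HYPOTHETICAL weighting: each sub-question weighs `base` times the previous."""
    return [base**i for i in range(k)]


# Per-problem correctness of each sub-question (toy data, not benchmark results).
problems = [[True], [True, False], [False, True, True]]
c, w = benchmark_scores(problems, geometric_weights)
print(f"Complete: {c:.1f}  Weighted: {w:.1f}")
```

With positive weights, a problem's Weighted score is never below its Complete score (a fully correct problem scores 1.0 under both), which is consistent with every row of the leaderboard, where the Weighted column always exceeds the Complete column.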

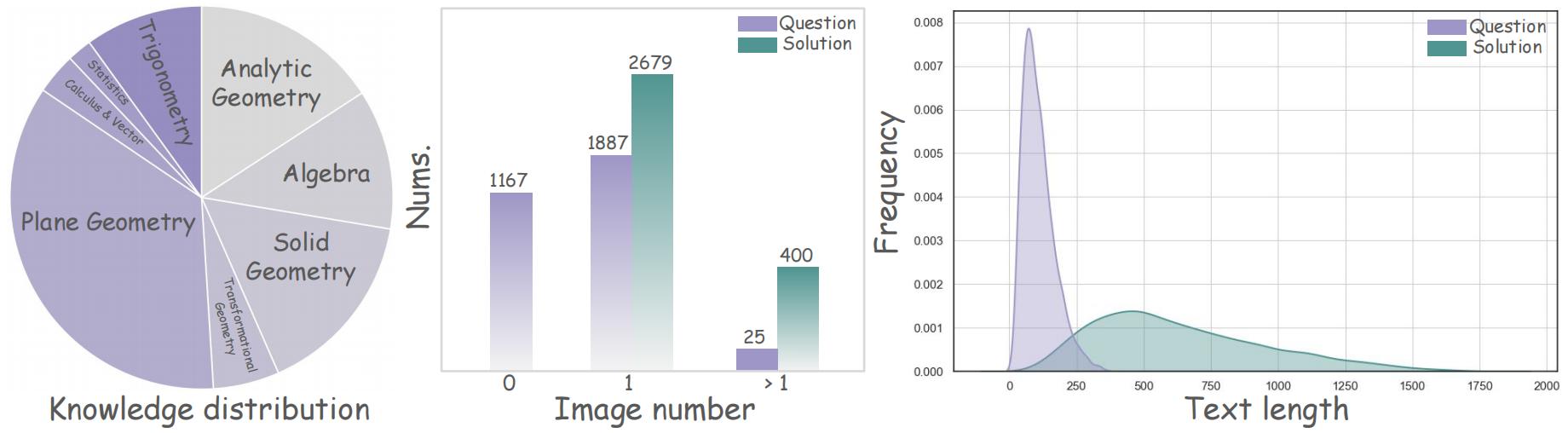

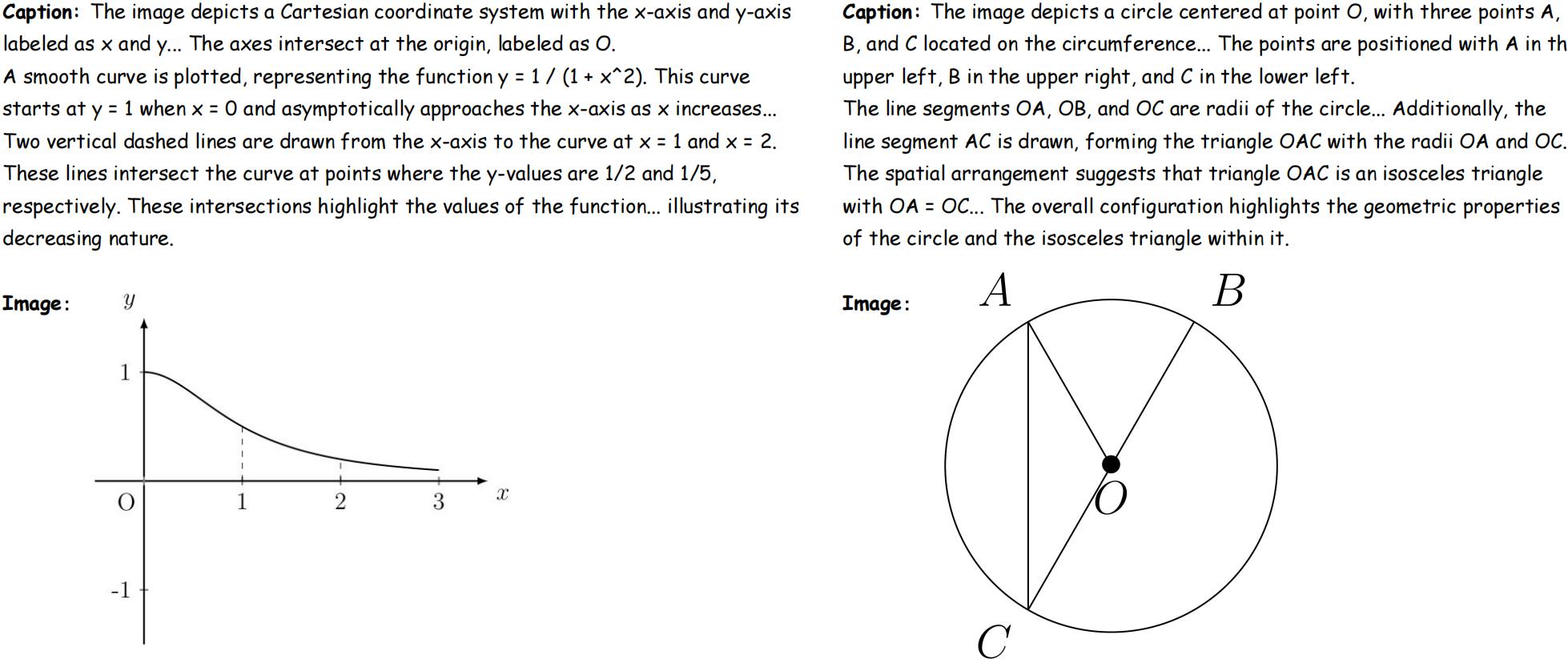

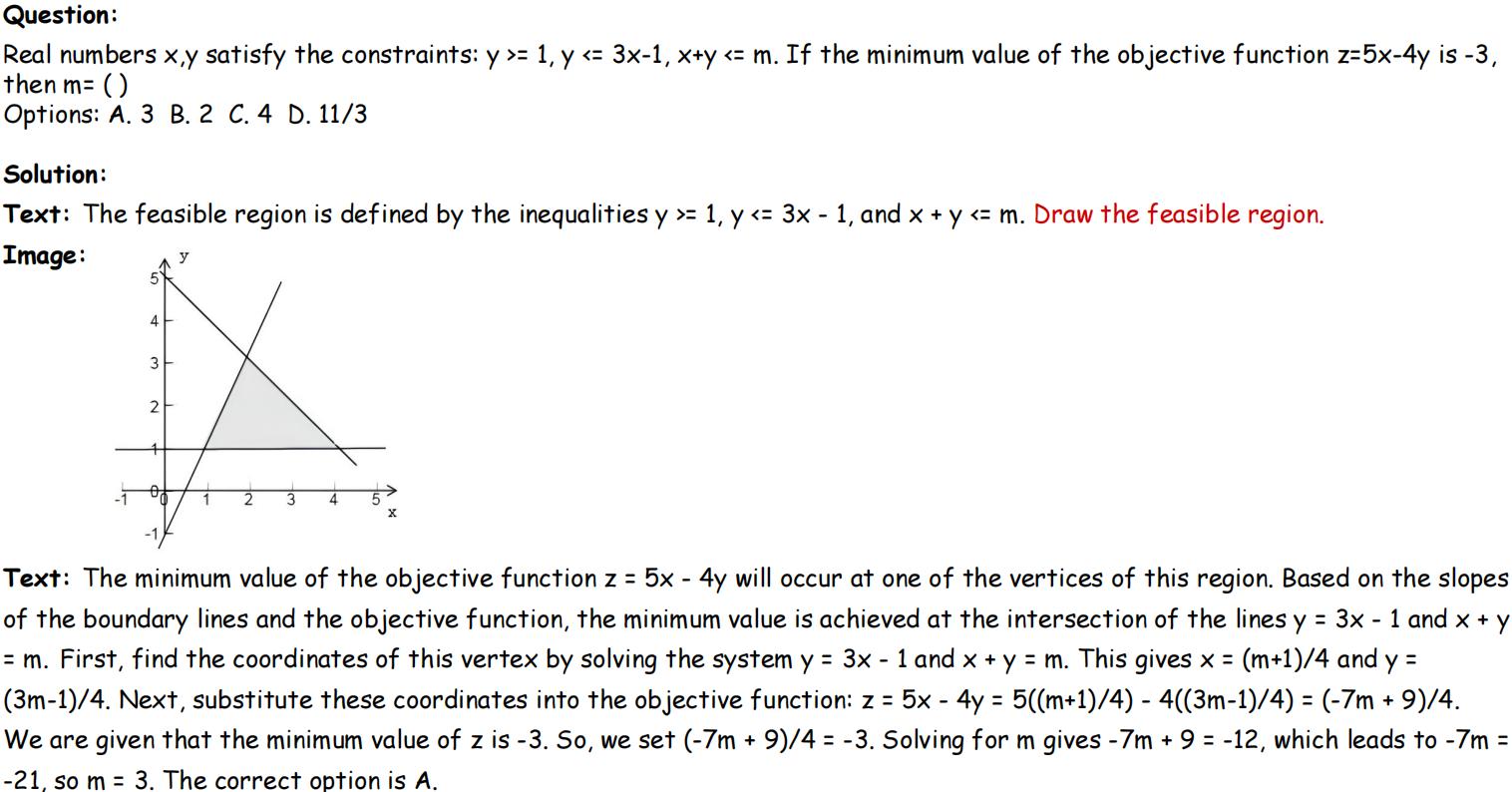

Statistical analysis of MathCanvas-Bench.

Left: Distribution of knowledge types.

Middle: Distribution of questions and solutions containing varying numbers of images.

Right: Text length distribution of questions and solutions (measured in text tokens).

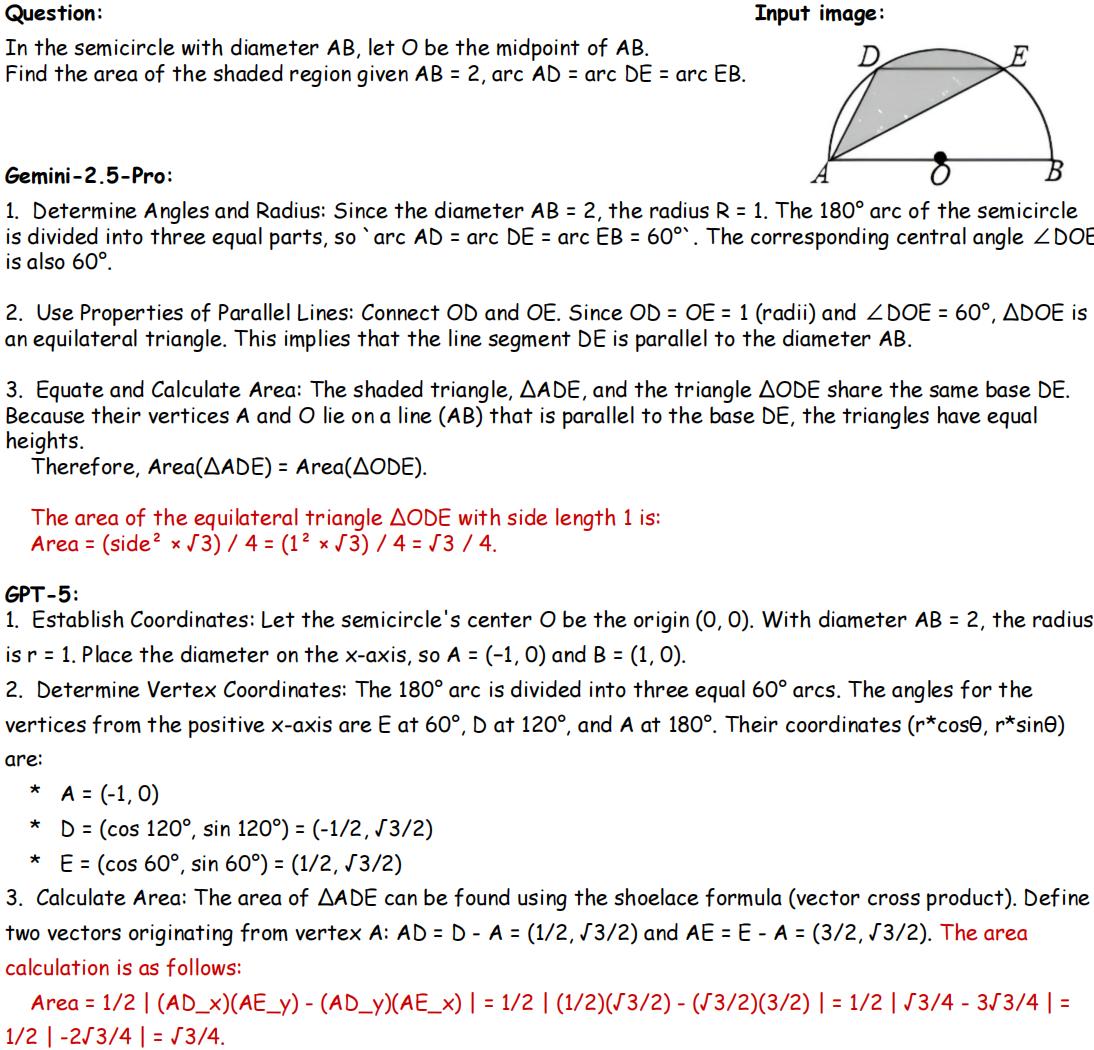

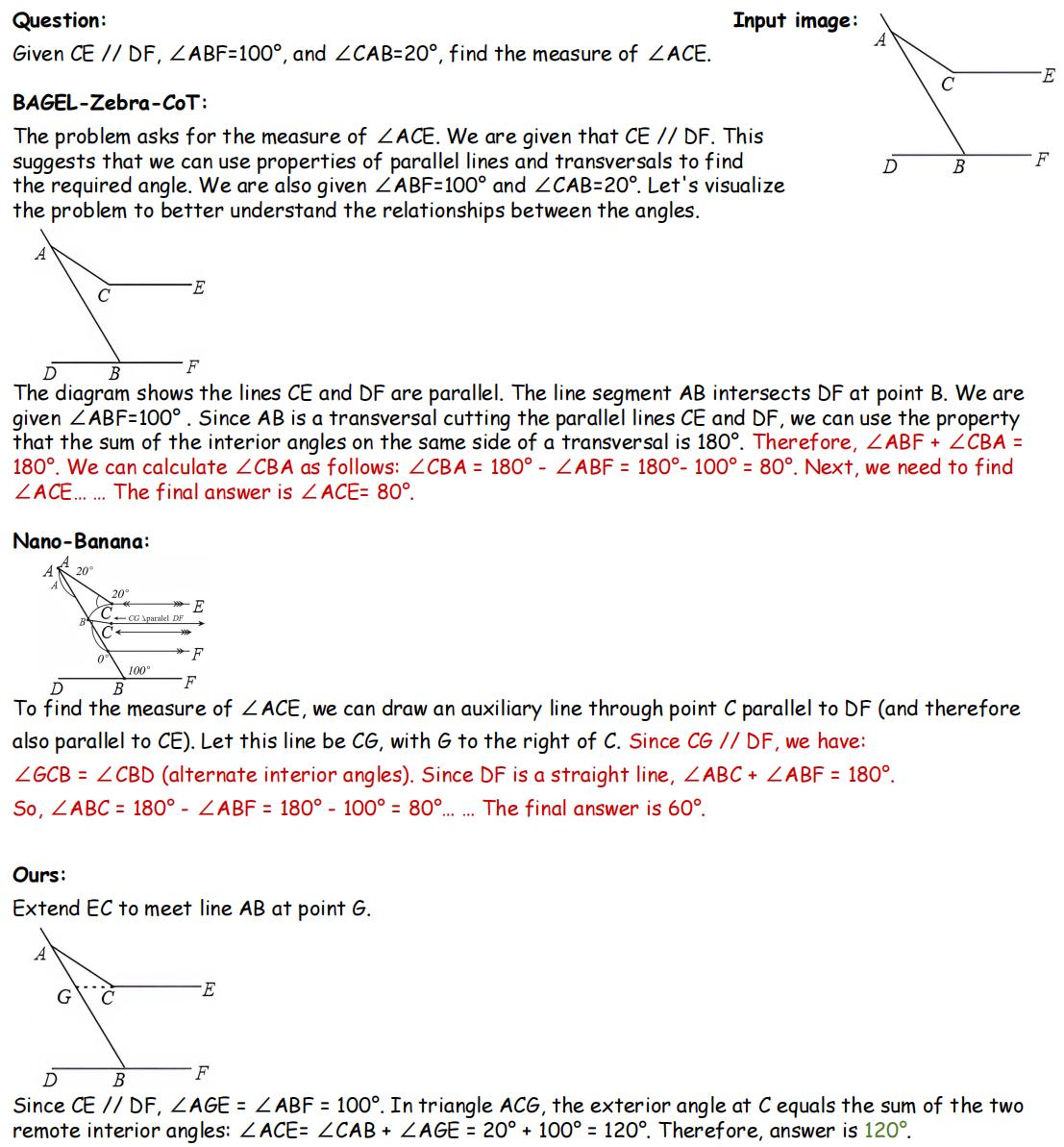

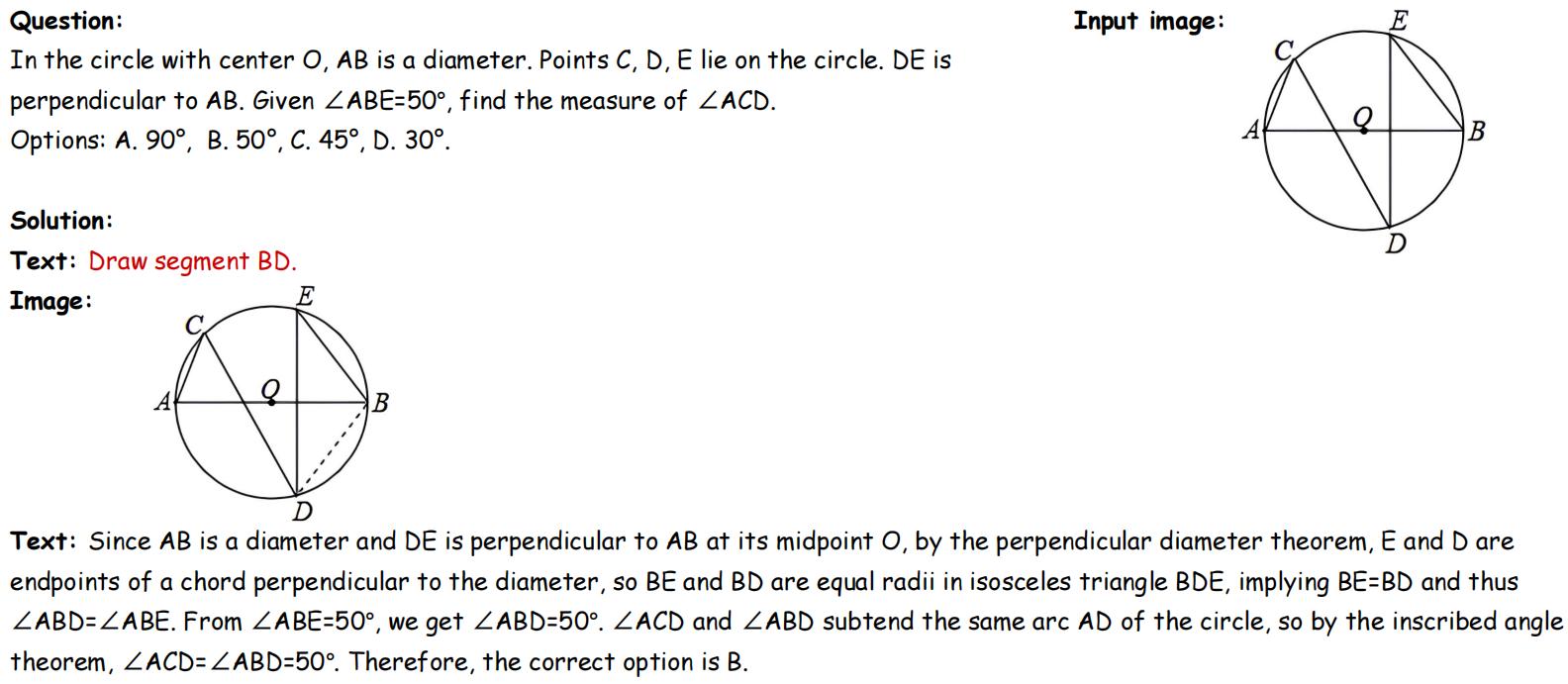

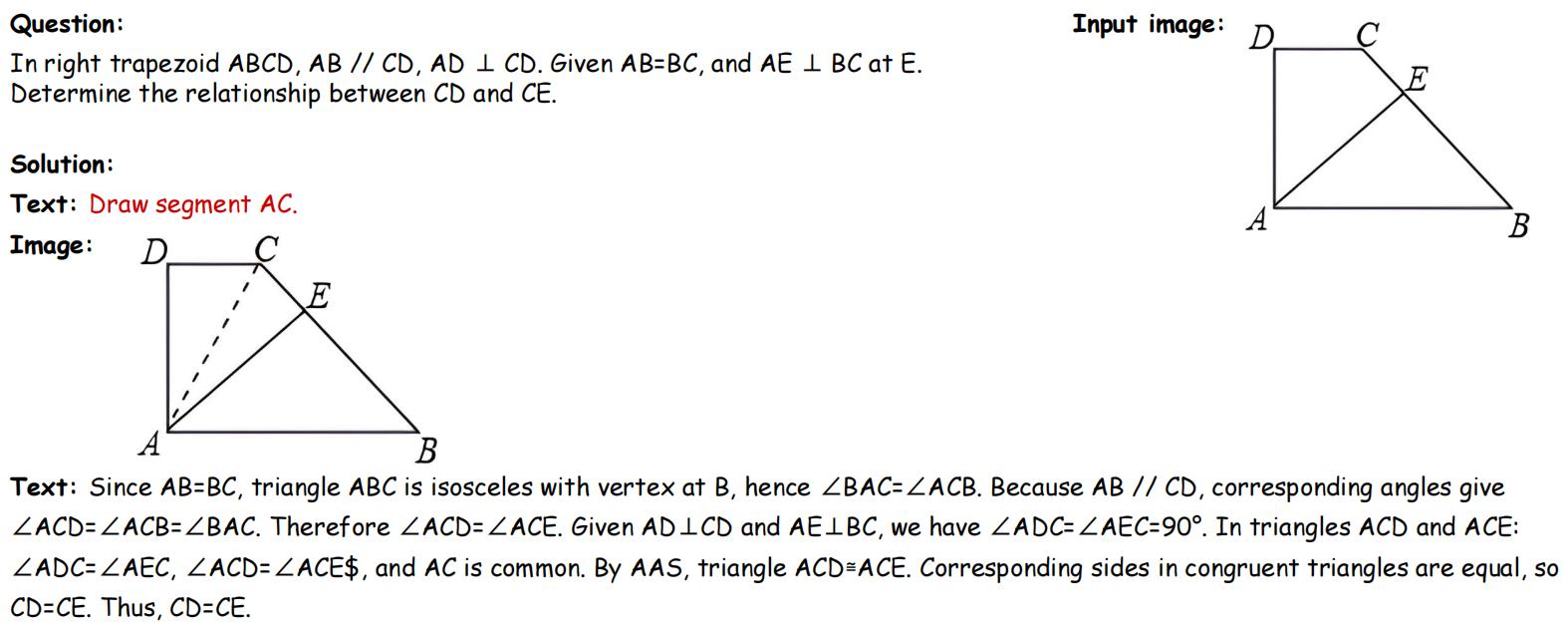

Sample outputs on MathCanvas-Bench from a range of models, including LMMs (Gemini-2.5-Pro, GPT-5) and ULMMs (BAGEL-Zebra-CoT, Nano-Banana, BAGEL-Canvas).

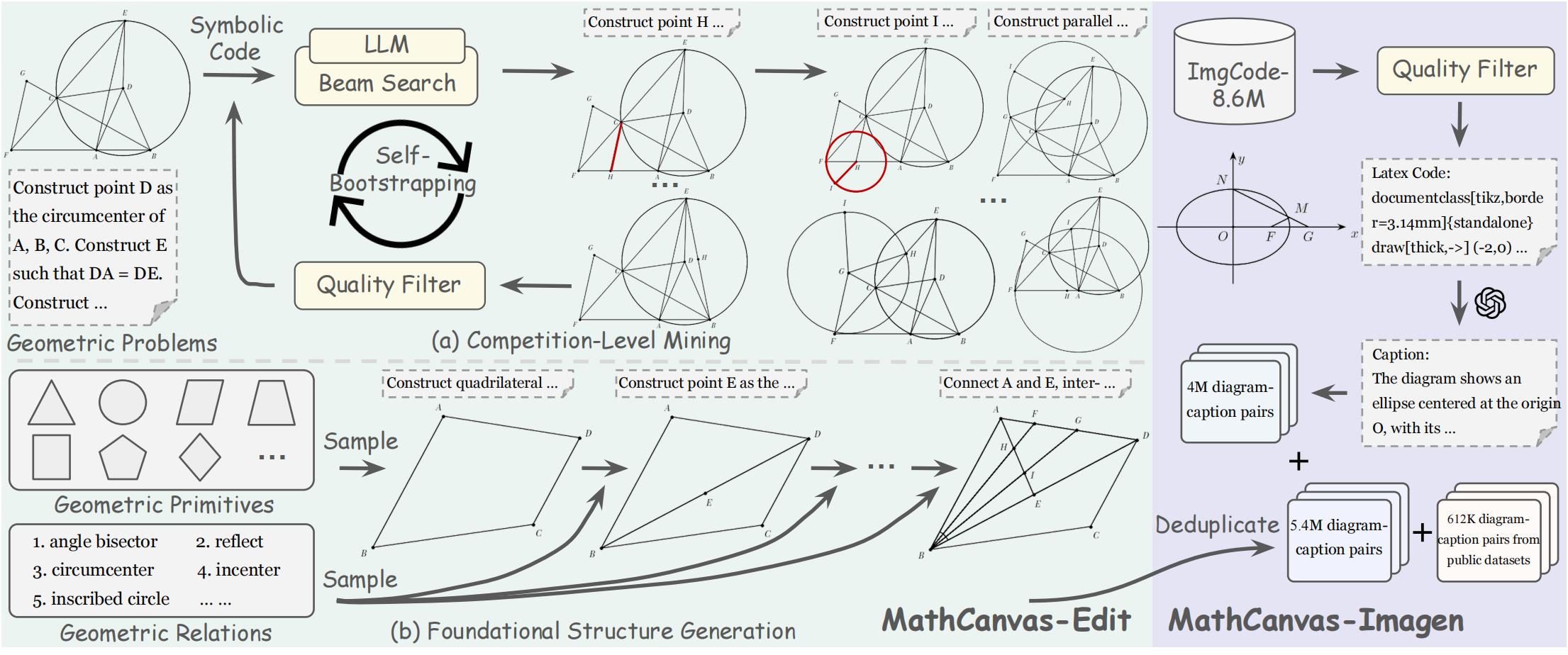

The curation pipeline for the MathCanvas-Edit and MathCanvas-Imagen datasets.

If you have any questions, please raise an issue or contact us at wkshi@link.cuhk.edu.hk.

@misc{shi2025mathcanvasintrinsicvisualchainofthought,
  title={MathCanvas: Intrinsic Visual Chain-of-Thought for Multimodal Mathematical Reasoning},
  author={Weikang Shi and Aldrich Yu and Rongyao Fang and Houxing Ren and Ke Wang and Aojun Zhou and Changyao Tian and Xinyu Fu and Yuxuan Hu and Zimu Lu and Linjiang Huang and Si Liu and Rui Liu and Hongsheng Li},
  year={2025},
  eprint={2510.14958},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2510.14958},
}